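According to the CAP theorem, consistency (C), availability (A), and partition tolerance (P) cannot all be achieved at once, so a trade-off is required. Traditional databases guarantee strong consistency (the ACID model) and high availability, which makes it very hard to build a distributed database cluster. This also explains why the scalability of such databases is so limited. The NoSQL movement, which has grown steadily in recent years, gives up strong consistency, adopts the BASE model, and designs distributed systems around eventual consistency, so that a system can reach very high availability and scalability.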

24.12.10

14.11.10

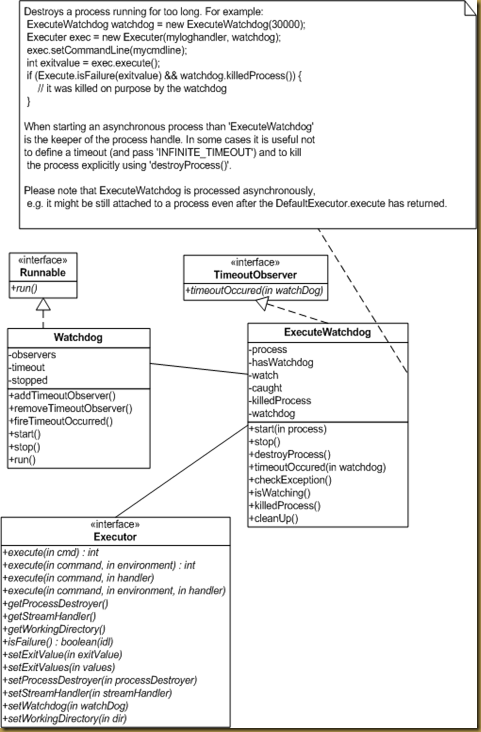

Why Does Apache Commons Exec Need the Watchdog to Work as an Observer?

Currently, I can’t see the requirements.

Watchdog & Observer Design Pattern

What is Observer Pattern

http://en.wikipedia.org/wiki/Observer_pattern

The observer pattern (a subset of the publish/subscribe pattern) is a software design pattern in which an object, called the subject, maintains a list of its dependents, called observers, and notifies them automatically of any state changes, usually by calling one of their methods. It is mainly used to implement distributed event handling systems.
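As a minimal sketch of the definition above, assuming nothing about how Apache Commons Exec wires its watchdog: the subject keeps a list of observers and calls one of their methods on every state change. The names `Subject`, `Observer`, `attach`, and `setState` are hypothetical and chosen only for illustration.

```java
import java.util.ArrayList;
import java.util.List;

public class ObserverSketch {
    /** Observer: gets called by the subject whenever the subject's state changes. */
    interface Observer {
        void update(String newState);
    }

    /** Subject: maintains the list of dependents and notifies each of them. */
    static class Subject {
        private final List<Observer> observers = new ArrayList<>();
        private String state;

        void attach(Observer observer) {
            observers.add(observer);
        }

        String getState() {
            return state;
        }

        void setState(String newState) {
            this.state = newState;
            // Notify every registered observer, in registration order.
            for (Observer observer : observers) {
                observer.update(newState);
            }
        }
    }

    public static void main(String[] args) {
        Subject process = new Subject();
        // A hypothetical "watchdog" that only prints what it observes.
        process.attach(state -> System.out.println("watchdog saw: " + state));
        process.setState("started");
        process.setState("finished");
    }
}
```

The subject does not know what its observers do with the notification, which is the decoupling the pattern is after.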

10.11.10

How to Create a Right-Click Menu Entry in Windows 7 for GVim or Another Portable Program

Very simple.

- Run regedit.

- Go to HKEY_CLASSES_ROOT\*\shell.

- Create a key with any name you like, e.g. "Edit with GVim". Then test it in Explorer by right-clicking any file. You will see a new menu item there.

- Create a new key named "command" underneath it. The key must be named "command".

- Set the default string value of the command key to: <command path> "%1"

- e.g.: C:\Tools\vim73\gvim "%1"

That’s it.

8.11.10

Understanding Simple Network Management Protocol (SNMP) Traps

http://www.cisco.com/en/US/tech/tk648/tk362/technologies_tech_note09186a0080094aa5.shtml

SNMPv1 traps are defined in RFC 1157, with these fields:

- Enterprise: Identifies the type of managed object that generates the trap.

- Agent address: Provides the address of the managed object that generates the trap.

- Generic trap type: Indicates one of a number of generic trap types.

- Specific trap code: Indicates one of a number of specific trap codes.

- Time stamp: Provides the amount of time that has elapsed between the last network reinitialization and generation of the trap.

- Variable bindings: The data field of the trap PDU. Each variable binding associates a particular MIB object instance with its current value.

Standard generic traps are: coldStart, warmStart, linkDown, linkUp, authenticationFailure, egpNeighborLoss. For generic SNMPv1 traps, the Enterprise field contains the value of sysObjectID of the device that sends the trap. For vendor-specific traps, the Generic trap type field is set to enterpriseSpecific(6).

In SNMPv2c, a trap is defined as a NOTIFICATION and is formatted differently from an SNMPv1 trap. It has these parameters:

- sysUpTime: This is the same as Time stamp in the SNMPv1 trap.

- snmpTrapOID: Trap identification field. For generic traps, values are defined in RFC 1907; for vendor-specific traps, snmpTrapOID is essentially a concatenation of the SNMPv1 Enterprise parameter and two additional sub-identifiers, '0', and the SNMPv1 Specific trap code parameter.

- VarBindList: This is a list of variable bindings.

In order for a management system to understand a trap sent to it by an agent, the management system must know what the object identifier (OID) defines. Therefore, it must have the MIB for that trap loaded. This provides the correct OID information so that the network management system can understand the traps sent to it.
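The snmpTrapOID rule described above can be sketched in a few lines. This is an illustration, not code from any SNMP library: the class and method names are hypothetical, and the enterprise OID `1.3.6.1.4.1.99999.1` is a made-up example. The mapping of generic trap number n to `1.3.6.1.6.3.1.1.5.(n+1)` is not spelled out in the text above; it comes from the SNMPv1/v2 coexistence rules.

```java
public class TrapOidSketch {
    /** snmpTraps subtree of SNMPv2-MIB; the generic trap OIDs live directly below it. */
    static final String SNMP_TRAPS = "1.3.6.1.6.3.1.1.5";

    /** Generic trap type value that marks a vendor-specific (enterprise) trap. */
    static final int ENTERPRISE_SPECIFIC = 6;

    /**
     * Derives the SNMPv2 snmpTrapOID from the SNMPv1 trap fields.
     * Generic traps 0..5 (coldStart..egpNeighborLoss) map to snmpTraps.(generic + 1);
     * enterprise-specific traps map to enterprise + ".0." + specific trap code.
     */
    static String toSnmpTrapOid(String enterprise, int genericTrap, int specificTrap) {
        if (genericTrap < 0 || genericTrap > ENTERPRISE_SPECIFIC) {
            throw new IllegalArgumentException("generic trap type must be 0..6: " + genericTrap);
        }
        if (genericTrap == ENTERPRISE_SPECIFIC) {
            return enterprise + ".0." + specificTrap;
        }
        return SNMP_TRAPS + "." + (genericTrap + 1);
    }

    public static void main(String[] args) {
        String enterprise = "1.3.6.1.4.1.99999.1"; // hypothetical enterprise OID
        // linkDown is generic trap 2, so the result is 1.3.6.1.6.3.1.1.5.3
        System.out.println(toSnmpTrapOid(enterprise, 2, 0));
        // enterprise-specific trap 17 becomes 1.3.6.1.4.1.99999.1.0.17
        System.out.println(toSnmpTrapOid(enterprise, 6, 17));
    }
}
```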

3.11.10

Trap in SNMP4j-Agent

SNMP traps are an interesting part of the protocol. The agent is responsible for sending traps/notifications, while the client runs in daemon mode to receive them.

Who is the server? Well, "server" is not a concept in SNMP v1 and v2. People call them agents.

How Are Traps Implemented in SNMP4J Agent?

That is a good question. Just take a look at BaseAgent. Here is an excerpt from SNMP4J Agent 1.4.1.

134 /**

135 * Initialize transport mappings, message dispatcher, basic MIB modules,

136 * proxy forwarder, VACM and USM security, and custom MIB modules and objects

137 * provided by sub-classes.

138 *

139 * @throws IOException

140 * if initialization fails because transport initialization fails.

141 */

142 public void init() throws IOException {

143 agentState = STATE_INIT_STARTED;

144 initTransportMappings();

145 initMessageDispatcher();

146 server.addContext(new OctetString());

147 snmpv2MIB = new SNMPv2MIB(sysDescr, sysOID, sysServices);

148

149 // register Snmp counters for updates

150 dispatcher.addCounterListener(snmpv2MIB);

151 agent.addCounterListener(snmpv2MIB);

152 snmpFrameworkMIB =

153 new SnmpFrameworkMIB((USM)

154 mpv3.getSecurityModel(SecurityModel.SECURITY_MODEL_USM),

155 dispatcher.getTransportMappings());

156 usmMIB = new UsmMIB(usm, SecurityProtocols.getInstance());

157 usm.addUsmUserListener(usmMIB);

158

159 vacmMIB = new VacmMIB(new MOServer[] { server });

160 snmpTargetMIB = new SnmpTargetMIB(dispatcher);

161 snmpNotificationMIB = new SnmpNotificationMIB();

162 snmpCommunityMIB = new SnmpCommunityMIB(snmpTargetMIB);

163 initConfigMIB();

164 snmpProxyMIB = new SnmpProxyMIB();

165 notificationOriginator =

166 new NotificationOriginatorImpl(session, vacmMIB,

167 snmpv2MIB.getSysUpTime(),

168 snmpTargetMIB, snmpNotificationMIB);

169 snmpv2MIB.setNotificationOriginator(agent);

170

171 setupDefaultProxyForwarder();

172 // add USM users

173 addUsmUser(usm);

174 // add SNMPv1/v2c community to SNMPv3 security name mappings

175 addCommunities(snmpCommunityMIB);

176 addViews(vacmMIB);

177 addNotificationTargets(snmpTargetMIB, snmpNotificationMIB);

178

179 registerSnmpMIBs();

180 }

At line 161, an SnmpNotificationMIB instance is created. From line 165 to 168, a NotificationOriginator is created by using NotificationOriginatorImpl. Line 169 sets up the notification originator for the SNMPv2 MIB object, while line 177 calls the abstract method addNotificationTargets() to add entries to the target MIB and the notification MIB.

addNotificationTargets()?

If you are using BaseAgent just like I do now, overriding the addNotificationTargets() method is a good place to register your trap targets. This method is abstract in BaseAgent, so you must override it, although you can leave the body empty in your derived class.

Target MIB?

The method addNotificationTargets() uses two parameters: Target MIB and Notification MIB.

Target MIB is an instance of SnmpTargetMIB class. It is initialized at line 160 above with a dispatcher, which is an instance of MessageDispatcherImpl, initialized by initMessageDispatcher().

From the SNMP protocol’s point of view, SnmpTargetMIB takes care of SNMP Target Objects in SNMP-TARGET-MIB, which is a version 2 MIB.

OID Base

| Name | OID | Path |
|---|---|---|
| snmpTargetObjects | 1.3.6.1.6.3.12.1 | iso(1). org(3). dod(6). internet(1). snmpV2(6). snmpModules(3). snmpTargetMIB(12). snmpTargetObjects(1) |

Details

| Name | Type | Access | Syntax | OID |
|---|---|---|---|---|
| snmpTargetObjects | GROUP | | | 1.3.6.1.6.3.12.1 |
| snmpTargetSpinLock | SCALAR | read-write | TestAndIncr | 1.3.6.1.6.3.12.1.1.0 |
| snmpTargetAddrTable | TABLE | not-accessible | SEQUENCE OF | 1.3.6.1.6.3.12.1.2 |
| snmpTargetAddrEntry | ENTRY | not-accessible | SnmpTargetAddrEntry | 1.3.6.1.6.3.12.1.2.1 |
| snmpTargetAddrName | TABULAR | not-accessible | SnmpAdminString ( 1..32 ) | 1.3.6.1.6.3.12.1.2.1.1 |
| snmpTargetAddrTDomain | TABULAR | read-create | TDomain | 1.3.6.1.6.3.12.1.2.1.2 |
| snmpTargetAddrTAddress | TABULAR | read-create | TAddress | 1.3.6.1.6.3.12.1.2.1.3 |
| snmpTargetAddrTimeout | TABULAR | read-create | TimeInterval | 1.3.6.1.6.3.12.1.2.1.4 |
| snmpTargetAddrRetryCount | TABULAR | read-create | Integer32 ( 0..255 ) | 1.3.6.1.6.3.12.1.2.1.5 |
| snmpTargetAddrTagList | TABULAR | read-create | SnmpTagList | 1.3.6.1.6.3.12.1.2.1.6 |
| snmpTargetAddrParams | TABULAR | read-create | SnmpAdminString ( 1..32 ) | 1.3.6.1.6.3.12.1.2.1.7 |
| snmpTargetAddrStorageType | TABULAR | read-create | StorageType | 1.3.6.1.6.3.12.1.2.1.8 |
| snmpTargetAddrRowStatus | TABULAR | read-create | RowStatus | 1.3.6.1.6.3.12.1.2.1.9 |
| snmpTargetParamsTable | TABLE | not-accessible | SEQUENCE OF | 1.3.6.1.6.3.12.1.3 |
| snmpTargetParamsEntry | ENTRY | not-accessible | SnmpTargetParamsEntry | 1.3.6.1.6.3.12.1.3.1 |
| snmpTargetParamsName | TABULAR | not-accessible | SnmpAdminString ( 1..32 ) | 1.3.6.1.6.3.12.1.3.1.1 |
| snmpTargetParamsMPModel | TABULAR | read-create | SnmpMessageProcessingModel | 1.3.6.1.6.3.12.1.3.1.2 |
| snmpTargetParamsSecurityModel | TABULAR | read-create | SnmpSecurityModel ( 1..2147483647 ) | 1.3.6.1.6.3.12.1.3.1.3 |
| snmpTargetParamsSecurityName | TABULAR | read-create | SnmpAdminString | 1.3.6.1.6.3.12.1.3.1.4 |
| snmpTargetParamsSecurityLevel | TABULAR | read-create | SnmpSecurityLevel | 1.3.6.1.6.3.12.1.3.1.5 |
| snmpTargetParamsStorageType | TABULAR | read-create | StorageType | 1.3.6.1.6.3.12.1.3.1.6 |
| snmpTargetParamsRowStatus | TABULAR | read-create | RowStatus | 1.3.6.1.6.3.12.1.3.1.7 |
| snmpUnavailableContexts | SCALAR | read-only | Counter32 | 1.3.6.1.6.3.12.1.4.0 |
| snmpUnknownContexts | SCALAR | read-only | Counter32 | 1.3.6.1.6.3.12.1.5.0 |

What can I do with SNMP4J Agent?

You probably don't care about the SNMP Target MIB itself. All you need may be how to use SNMP4J Agent. Good.

Although the Java API documentation for SNMP4J Agent is not very helpful, the project uses meaningful names and, more importantly, provides the source code. That is why we like open source, right?

Notification MIB?

27.10.10

Aspect Oriented Programming with Spring AspectJ and Maven

http://www.javacodegeeks.com/2010/07/aspect-oriented-programming-with-spring.html

The Spring framework comes with AOP support. In fact, as stated in the Spring reference documentation,

“One of the key components of Spring is the AOP framework. While the Spring IoC container does not depend on AOP, meaning you do not need to use AOP if you don't want to, AOP complements Spring IoC to provide a very capable middleware solution. AOP is used in the Spring Framework to...

- ... provide declarative enterprise services, especially as a replacement for EJB declarative services. The most important such service is declarative transaction management.

- ... allow users to implement custom aspects, complementing their use of OOP with AOP.”

Nevertheless, the Spring AOP framework comes with certain limitations in comparison to a complete AOP implementation such as AspectJ. The most common problems people encounter while working with Spring AOP derive from the fact that Spring AOP is "proxy-based". In other words, when a bean is used as a dependency and its method(s) should be advised by particular aspect(s), the IoC container injects an "aspect-aware" bean proxy instead of the bean itself. Method invocations are performed against the proxy bean instance, transparently to the user, so that aspect logic is executed before and/or after delegating the call to the actual "proxied" bean.

Furthermore, the Spring AOP framework uses either JDK dynamic proxies or CGLIB to create the proxy for a given target object. The first can create proxies only for interfaces, whilst the second is able to proxy concrete classes but with certain limitations. In particular, as stated in the Spring reference documentation,

“If the target object to be proxied implements at least one interface then a JDK dynamic proxy will be used. All of the interfaces implemented by the target type will be proxied. If the target object does not implement any interfaces then a CGLIB proxy will be created.”

To summarize, when working with the Spring AOP framework you should keep two important things in mind:

- If your "proxied" bean implements at least one interface, the proxy bean can ONLY be cast to that interface(s). If you try to cast it to the "proxied" bean class, you should expect a ClassCastException to be thrown at runtime. Nevertheless, the Spring AOP framework provides the option to force CGLIB proxying, but with the aforementioned limitations (please refer to the relevant chapter of the Spring reference documentation)

- Aspects do not apply to intra-operation calls, meaning that there is no way for the proxy to intercept a call to a method that originates from another method of the same "proxied" bean

AspectJ weaving is not proxy-based, so neither limitation applies to it. AspectJ supports three types of weaving:

- compile time weaving – compile either target source or aspect classes via the AspectJ compiler

- post compile weaving – inject aspect instructions to already compiled classes

- load time weaving – inject aspect instructions to the byte code during class loading
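The two proxy limitations summarized earlier can be reproduced with a plain JDK dynamic proxy, without Spring. The sketch below is only an illustration under that assumption: `Greeter`, `GreeterImpl`, and `proxyFor` are hypothetical names, and the invocation handler merely records method names where Spring would run advice.

```java
import java.lang.reflect.InvocationHandler;
import java.lang.reflect.Proxy;
import java.util.ArrayList;
import java.util.List;

public class ProxyLimitsSketch {
    interface Greeter {
        String greet();
        String name();
    }

    static class GreeterImpl implements Greeter {
        @Override
        public String greet() {
            // Self-invocation: name() is called on "this", not on the proxy.
            return "Hello, " + name();
        }

        @Override
        public String name() {
            return "world";
        }
    }

    /** Wraps the target in a JDK dynamic proxy that records every intercepted method name. */
    static Greeter proxyFor(Greeter target, List<String> intercepted) {
        InvocationHandler handler = (proxy, method, args) -> {
            intercepted.add(method.getName()); // stands in for "before" advice
            return method.invoke(target, args);
        };
        return (Greeter) Proxy.newProxyInstance(
                Greeter.class.getClassLoader(),
                new Class<?>[] {Greeter.class},
                handler);
    }

    public static void main(String[] args) {
        List<String> intercepted = new ArrayList<>();
        Greeter greeter = proxyFor(new GreeterImpl(), intercepted);

        System.out.println(greeter.greet());
        // Only greet() shows up; the inner call to name() bypassed the proxy.
        System.out.println("intercepted: " + intercepted);

        try {
            GreeterImpl impl = (GreeterImpl) greeter; // the proxy only implements Greeter
            System.out.println("unexpected: cast succeeded for " + impl);
        } catch (ClassCastException e) {
            System.out.println("cast to GreeterImpl failed");
        }
    }
}
```

With woven aspects there is no proxy object in between, which is why both effects disappear.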

Spring users who have already implemented aspects for their bean services can switch to AspectJ transparently, meaning that no special code needs to be written, since the Spring AOP framework uses a subset of the AspectJ pointcut expression language and @AspectJ Spring aspects are fully eligible for AspectJ weaving.

Our preferred development environment is Eclipse, so as a prerequisite you must have Eclipse with Maven support installed. The installation of the Maven plugin for Eclipse is out of the scope of this tutorial and will not be discussed. For this tutorial we will be using Eclipse Galileo, the "m2eclipse" Maven Integration for Eclipse plugin version 0.10.0, Spring version 3.0.1, aspectjrt version 1.6.7 and aspectj-maven-plugin version 1.3.

Let's begin,

- Create a new Maven project, go to File → Project → Maven → Maven Project

- In the "Select project name and location" page of the wizard, make sure that the "Create a simple project (skip archetype selection)" option is unchecked, hit "Next" to continue with default values

- In the "Select an Archetype" page of the wizard, select "Nexus Indexer" at the "Catalog" drop down list and, after the archetypes selection area is refreshed, select the "webapp-jee5" archetype from "org.codehaus.mojo.archetypes" to use. You can use the "filter" text box to narrow search results. Hit "Next" to continue

- In the "Enter an artifact id" page of the wizard, you can define the name and main package of your project. We will set the "Group Id" variable to "com.javacodegeeks" and the "Artifact Id" variable to "aspectjspring". The aforementioned selections compose the main project package as "com.javacodegeeks.aspectjspring" and the project name as "aspectjspring". Hit "Finish" to exit the wizard and to create your project

Let's recap a few things about the Maven Web project structure

- /src/main/java folder contains source files for the dynamic content of the application

- /src/test/java folder contains all source files for unit tests

- /src/main/webapp folder contains essential files for creating a valid web application, e.g. "web.xml"

- /target folder contains the compiled and packaged deliverables

- The "pom.xml" is the project object model (POM) file, the single file that contains all project related configuration.

Open "pom.xml" in the POM editor and make the following changes:

1. Locate the "Properties" section at the "Overview" page of the POM editor and perform the following changes:

   - Create a new property with name org.springframework.version and value 3.0.1.RELEASE

   - Create a new property with name maven.compiler.source and value according to the version of your Java runtime environment, we will use 1.6

   - Create a new property with name maven.compiler.target and value according to the version of your Java runtime environment, we will use 1.6

2. Navigate to the "Dependencies" page of the POM editor and create the following dependencies (you should fill the "GroupId", "Artifact Id" and "Version" fields of the "Dependency Details" section at that page):

   - Group Id: org.springframework Artifact Id: spring-web Version: ${org.springframework.version}

   - Group Id: org.aspectj Artifact Id: aspectjrt Version: 1.6.7

3. Navigate to the "Plugins" page of the POM editor and create the following plugin (you should fill the "GroupId", "Artifact Id" and "Version" fields of the "Plugin Details" section at that page):

   - Group Id: org.codehaus.mojo Artifact Id: aspectj-maven-plugin Version: 1.3

4. At the "Plugins" page of the POM editor, select the newly created plugin (from the "Plugins" section), and bind it to the compile execution goal. To do so, locate the "Execution" section and create a new execution. At the "Execution Details" section create a new goal and name it "compile"

5. The newly created plugin needs one final configuration change. We must define what version of the Java runtime environment we are using in order for the AspectJ compiler to properly weave aspect classes. We need to edit the "pom.xml" file to perform the change. Select the "pom.xml" page of the POM editor, locate the newly created plugin and alter it as follows:

<plugin>

<groupId>org.codehaus.mojo</groupId>

<artifactId>aspectj-maven-plugin</artifactId>

<version>1.3</version>

<configuration>

<source>${maven.compiler.source}</source>

<target>${maven.compiler.target}</target>

</configuration>

<executions>

<execution>

<goals>

<goal>compile</goal>

</goals>

</execution>

</executions>

</plugin>

6. Finally, change the "maven-compiler-plugin" as shown below:

<plugin>

<groupId>org.apache.maven.plugins</groupId>

<artifactId>maven-compiler-plugin</artifactId>

<version>2.0.2</version>

<configuration>

<source>${maven.compiler.source}</source>

<target>${maven.compiler.target}</target>

</configuration>

</plugin>

As you can see, Maven manages library dependencies declaratively. A local repository is created (by default under the {user_home}/.m2 folder) and all required libraries are downloaded from public repositories and placed there. Furthermore, dependencies between libraries (transitive dependencies) are resolved automatically.

The next step is to provide hooks for the web application so that it loads the Spring context upon startup.

Locate the "web.xml" file under /src/main/webapp/WEB-INF and add the following listener, which loads the Spring context upon startup:

<listener>

<listener-class>

org.springframework.web.context.ContextLoaderListener

</listener-class>

</listener>

Now let's create the applicationContext.xml file that will drive the Spring container. Create the file under the /src/main/webapp/WEB-INF directory. An example "applicationContext.xml" is presented below

<beans xmlns="http://www.springframework.org/schema/beans"

xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xmlns:p="http://www.springframework.org/schema/p"

xmlns:aop="http://www.springframework.org/schema/aop" xmlns:context="http://www.springframework.org/schema/context"

xmlns:jee="http://www.springframework.org/schema/jee" xmlns:tx="http://www.springframework.org/schema/tx"

xmlns:task="http://www.springframework.org/schema/task"

xsi:schemaLocation="

http://www.springframework.org/schema/aop http://www.springframework.org/schema/aop/spring-aop-3.0.xsd

http://www.springframework.org/schema/beans http://www.springframework.org/schema/beans/spring-beans-3.0.xsd

http://www.springframework.org/schema/context http://www.springframework.org/schema/context/spring-context-3.0.xsd

http://www.springframework.org/schema/jee http://www.springframework.org/schema/jee/spring-jee-3.0.xsd

http://www.springframework.org/schema/tx http://www.springframework.org/schema/tx/spring-tx-3.0.xsd

http://www.springframework.org/schema/task http://www.springframework.org/schema/task/spring-task-3.0.xsd">

<context:component-scan base-package="com.javacodegeeks.aspectjspring" />

<bean class="com.javacodegeeks.aspectjspring.aspects.GreetingAspect" factory-method="aspectOf">

<property name="message" value="Hello from Greeting Aspect"/>

</bean>

</beans>

Things to notice here:

- Change the base-package attribute of the context:component-scan element to the base package of your project, so that it is scanned for Spring components

- We have to define our aspects in "applicationContext.xml" only if we want to inject dependencies into them

- AspectJ has the notion of "aspect association", which defines how aspect state is managed. The following state associations are supported:

   - Per JVM – one shared aspect instance is constructed and used (default)

   - Per object – aspect has its own state per every advised object

   - Per control flow – aspect has its own state per particular control flow

All AspectJ aspect classes have "hasAspect()" and "aspectOf()" static methods. These methods are implicitly generated by the AspectJ compiler/load time weaver. So, for the default aspect state there is a single aspect instance that can be retrieved using the "aspectOf()" method. This is why the bean definition above uses factory-method="aspectOf".

Let's now create the "greeting" Spring service and the relevant "greeting" AspectJ aspect. Create a sub-package named "services" under your main package and place the "GreetingService" class there. An example "greeting" service is shown below:

package com.javacodegeeks.aspectjspring.services;

import org.springframework.stereotype.Service;

@Service("greetingService")

public class GreetingService {

public String sayHello() {

return "Hello from Greeting Service";

}

}

Create a sub-package named "aspects" under your main package and place the "GreetingAspect" class there. An example "greeting" aspect is shown below:

package com.javacodegeeks.aspectjspring.aspects;

import org.aspectj.lang.ProceedingJoinPoint;

import org.aspectj.lang.annotation.Around;

import org.aspectj.lang.annotation.Aspect;

@Aspect

public class GreetingAspect {

private String message;

public void setMessage(String message) {

this.message = message;

}

@Around("execution(* com.javacodegeeks.aspectjspring.services.GreetingService.*(..))")

public Object advice(ProceedingJoinPoint pjp) throws Throwable {

String serviceGreeting = (String) pjp.proceed();

return message + " and " + serviceGreeting;

}

}

Finally locate the main Web page of your project, “index.jsp”, under /src/main/webapp folder and alter it as follows :

<%@ page language="java" import="org.springframework.web.context.WebApplicationContext, org.springframework.web.context.support.WebApplicationContextUtils, com.javacodegeeks.aspectjspring.services.GreetingService"%>

<%@page contentType="text/html" pageEncoding="UTF-8"%>

<!DOCTYPE HTML PUBLIC "-//W3C//DTD HTML 4.01 Transitional//EN"

"http://www.w3.org/TR/html4/loose.dtd">

<%

WebApplicationContext webApplicationContext = WebApplicationContextUtils.getWebApplicationContext(getServletContext());

GreetingService greetingService = (GreetingService) webApplicationContext.getBean("greetingService");

%>

<html>

<head>

<meta http-equiv="Content-Type" content="text/html; charset=UTF-8">

<title>JSP Page</title>

</head>

<body>

<h1>Test service invoked and greets you by saying : <%=greetingService.sayHello()%></h1>

</body>

</html>

Things to notice here :

- Upon page load, we retrieve the Spring Web application context, and lookup our “greeting” service. All we have to do is to invoke the “sayHello()” method to see the combined greeting message from our aspect and the service

To build the application, right click on your project → Run As → Maven package

To deploy the web application, just copy the ".war" file from the "target" directory to the Apache Tomcat "webapps" folder

To launch the application, point your browser to the following address

http://localhost:8080/{application_name}/

If all went well you should see your main web page displaying the following :

“Test service invoked and greets you by saying : Hello from Greeting Aspect and Hello from Greeting Service”


Hope you liked it

Justin

25.10.10

Net-SNMP Tutorial -- traps

Traps can be used by network entities to signal abnormal conditions to management stations. The following paragraphs describe how traps are defined in MIB files, how they are generated by the snmptrap utility, and how they are received and processed by the snmptrapd utility.

Note: as I prefer the OID notations using the MODULE::identifier notation, this is used throughout in the following examples, and the snmptrapd output similarly assumes the -OS option.

Definition of traps

Traps come in two distinctly different forms: SNMPv1 traps and SNMPv2 traps (or notifications).

The SNMPv1 trap

The SNMPv1 trap is defined in the MIB file using the TRAP-TYPE macro, as in the following example

TRAP-TEST-MIB DEFINITIONS ::= BEGIN

IMPORTS ucdExperimental FROM UCD-SNMP-MIB;

demotraps OBJECT IDENTIFIER ::= { ucdExperimental 990 }

demo-trap TRAP-TYPE

STATUS current

ENTERPRISE demotraps

VARIABLES { sysLocation }

DESCRIPTION "This is just a demo"

::= 17

END

This defines a single enterprise specific trap, that can be issued as follows

% snmptrap -v 1 -c public host TRAP-TEST-MIB::demotraps localhost 6 17 '' \

SNMPv2-MIB::sysLocation.0 s "Just here"

and when received by snmptrapd is displayed as follows

1999-11-12 23:26:07 localhost [127.0.0.1] TRAP-TEST-MIB::demotraps:

Enterprise Specific Trap (demo-trap) Uptime: 1 day, 5:34:06

SNMPv2-MIB::sysLocation.0 = "Just here"

The SNMPv2 notification

The format of the SNMPv2 notification is somewhat different. The definition in the MIB file looks as follows

NOTIFICATION-TEST-MIB DEFINITIONS ::= BEGIN

IMPORTS ucdavis FROM UCD-SNMP-MIB;

demonotifs OBJECT IDENTIFIER ::= { ucdavis 991 }

demo-notif NOTIFICATION-TYPE

STATUS current

OBJECTS { sysLocation }

DESCRIPTION "Just a test notification"

::= { demonotifs 17 }

END

This is a definition that is similar to the SNMPv1 trap given above. Issuing this notification looks as follows

% snmptrap -v 2c -c public localhost '' NOTIFICATION-TEST-MIB::demo-notif \

SNMPv2-MIB::sysLocation.0 s "just here"

and the resulting output from the trap daemon is

1999-11-13 08:31:33 localhost [127.0.0.1]:

SNMPv2-MIB::sysUpTime.0 = Timeticks: (13917129) 1 day, 14:39:31.29

SNMPv2-MIB::snmpTrapOID.0 = OID: NOTIFICATION-TEST-MIB::demo-notif

SNMPv2-MIB::sysLocation.0 = "just here"

Defining trap handlers

The snmptrapd utility can execute other programs when a trap is received. This is controlled by the traphandle directive, with the syntax

traphandle OID command

Notice that this only takes an OID to determine which trap (or notification) is received. This means that SNMPv1 traps need to be represented in SNMPv2 format, which is described in RFC 2089. Basically, the OID for our above defined trap is created by taking the ENTERPRISE parameter and adding the sub-ids 0 and 17. Similarly, OID values for the generic SNMPv1 traps are defined to be the same as for SNMPv2.

The command is executed by snmptrapd upon reception of the trap, with the data of the trap as its standard input. The first line is the host name, the second the IP address of the trap sender, and each following line consists of an OID VALUE pair with the data from the received trap.

A simple shell script to be called from snmptrapd is the following:

#!/bin/sh

read host

read ip

vars=

while read oid val

do

if [ "$vars" = "" ]

then

vars="$oid = $val"

else

vars="$vars, $oid = $val"

fi

done

echo trap: $1 $host $ip $vars
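For comparison, the same input format (host name, IP address, then one OID VALUE pair per line) can be handled in Java. This is a rough sketch that mirrors the shell script above, not part of Net-SNMP; the class and method names are hypothetical, and the sample lines in main are made up. A real handler would collect the lines from standard input instead.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class TrapHandlerSketch {
    /**
     * Formats the lines snmptrapd passes to a trap handler: line 0 is the host name,
     * line 1 the sender's IP address, every further line an "OID VALUE" pair.
     */
    static String format(String label, List<String> lines) {
        String host = lines.size() > 0 ? lines.get(0) : "";
        String ip = lines.size() > 1 ? lines.get(1) : "";

        List<String> vars = new ArrayList<>();
        for (String line : lines.subList(Math.min(2, lines.size()), lines.size())) {
            String trimmed = line.trim();
            if (trimmed.isEmpty()) {
                continue; // skip blank lines
            }
            // Like "read oid val": the first word is the OID, the rest is the value.
            String[] parts = trimmed.split("\\s+", 2);
            String value = parts.length > 1 ? parts[1] : "";
            vars.add(parts[0] + " = " + value);
        }

        String result = "trap: " + label + " " + host + " " + ip;
        return vars.isEmpty() ? result : result + " " + String.join(", ", vars);
    }

    public static void main(String[] args) {
        // Made-up sample input in the format described above.
        List<String> sample = Arrays.asList(
                "localhost",
                "127.0.0.1",
                "SNMPv2-MIB::snmpTrapOID.0 IF-MIB::linkDown",
                "IF-MIB::ifIndex.0 1");
        System.out.println(format("down", sample));
    }
}
```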

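Trap handlers for the various traps are then defined with traphandle directives in the snmptrapd configuration, as follows

# the generic traps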

traphandle SNMPv2-MIB::coldStart /home/nba/bin/traps cold

traphandle SNMPv2-MIB::warmStart /home/nba/bin/traps warm

traphandle IF-MIB::linkDown /home/nba/bin/traps down

traphandle IF-MIB::linkUp /home/nba/bin/traps up

traphandle SNMPv2-MIB::authenticationFailure /home/nba/bin/traps auth

# this one is deprecated

traphandle .1.3.6.1.6.3.1.1.5.6 /home/nba/bin/traps egp-neighbor-loss

# enterprise specific traps

traphandle TRAP-TEST-MIB::demo-trap /home/nba/bin/traps demo-trap

traphandle NOTIFICATION-TEST-MIB::demo-notif /home/nba/bin/traps demo-notif

With these handlers in place, sending a generic linkDown trap

% snmptrap -v 1 -c public localhost TRAP-TEST-MIB::demotraps localhost 2 0 '' \

IF-MIB::ifIndex i 1

is displayed by snmptrapd as

1999-11-13 12:46:49 localhost [127.0.0.1] TRAP-TEST-MIB::traps:

Link Down Trap (0) Uptime: 1 day, 18:54:46.27

IF-MIB::ifIndex.0 = 1

and the handler script prints one line per trap. The first line below belongs to the linkDown trap; the other two are the handler output for the demo-trap and demo-notif examples:

trap: down localhost 127.0.0.1 SNMPv2-MIB::sysUpTime = 1:18:54:46.27, SNMPv2-MIB::snmpTrapOID = IF-MIB::linkDown, IF-MIB::ifIndex.0 = 1, SNMPv2-MIB::snmpTrapEnterprise = TRAP-TEST-MIB::traps

trap: demo-trap localhost 127.0.0.1 SNMPv2-MIB::sysUpTime = 1:19:00:48.01, SNMPv2-MIB::snmpTrapOID = TRAP-TEST-MIB::demo-trap, SNMPv2-MIB::sysLocation.0 = "just here", SNMPv2-MIB::snmpTrapEnterprise = TRAP-TEST-MIB::traps

trap: demo-notif localhost 127.0.0.1 SNMPv2-MIB::sysUpTime.0 = 1:19:02:06.33, SNMPv2-MIB::snmpTrapOID.0 = NOTIFICATION-TEST-MIB::demo-notif, SNMPv2-MIB::sysLocation.0 = "just here"

Generating traps from the agent

The agent is able to generate a few traps by itself. When starting up, it will generate a SNMPv2-MIB::coldStart trap, and when shutting down a UCD-SNMP-MIB::ucdShutDown trap. These traps are sent to the managers specified in the snmpd.conf file, using the trapsink or trap2sink directive (for SNMPv1 and SNMPv2 traps respectively).

# send v1 traps

trapsink nms.system.com public

# also send v2 traps

trap2sink nms.system.com secret

# send traps on authentication failures

authtrapenable 1

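Authentication failure traps can also be enabled at runtime with snmpset (snmpEnableAuthenTraps is an integer object, where 1 means enabled):

$ snmpset -c public agent SNMPv2-MIB::snmpEnableAuthenTraps.0 i 1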

Note: the current 4.0 version of Net-SNMP does not generate authentication failure traps. This will hopefully be corrected before the next release.

Setting Up a SNMP Server in Ubuntu

What is net-snmp ?

Simple Network Management Protocol (SNMP) is a widely used protocol for monitoring the health and welfare of network equipment (eg. routers), computer equipment and even devices like UPSs. Net-SNMP is a suite of applications used to implement SNMP v1, SNMP v2c and SNMP v3 using both IPv4 and IPv6.

Net-SNMP Tutorials

http://www.net-snmp.org/tutorial/tutorial-5/

Net-SNMP Documentation

http://www.net-snmp.org/docs/readmefiles.html

# Installing SNMP Server in Ubuntu #####

$ sudo apt-get install snmpd

# Configuring SNMP Server #####

/etc/snmp/snmpd.conf - configuration file for the Net-SNMP SNMP agent.

/etc/snmp/snmptrapd.conf - configuration file for the Net-SNMP trap daemon.

Set up the SNMP server to allow read access from the other machines in your network. To do this, open the file /etc/snmp/snmpd.conf, change the configuration as shown below, and save the file.

$ sudo gedit /etc/snmp/snmpd.conf

snmpd.conf

#---------------------------------------------------------------

######################################

# Map the security name/networks into a community name.

# We will use the security names to create access groups

######################################

# sec.name source community

com2sec my_sn1 localhost my_comnt

com2sec my_sn2 192.168.10.0/24 my_comnt

####################################

# Associate the security name (network/community) to the

# access groups, while indicating the snmp protocol version

####################################

# sec.model sec.name

group MyROGroup v1 my_sn1

group MyROGroup v2c my_sn1

group MyROGroup v1 my_sn2

group MyROGroup v2c my_sn2

group MyRWGroup v1 my_sn1

group MyRWGroup v2c my_sn1

group MyRWGroup v1 my_sn2

group MyRWGroup v2c my_sn2

#######################################

# Create the views on to which the access group will have access,

# we can define these views either by inclusion or exclusion.

# inclusion - you access only that branch of the mib tree

# exclusion - you access all the branches except that one

#######################################

# incl/excl subtree mask (optional)

view my_vw1 included .1 80

view my_vw2 included .iso.org.dod.internet.mgmt.mib-2.system

#######################################

# Finally associate the access groups to the views and give them

# read/write access to the views.

#######################################

# context sec.model sec.level match read write notif

access MyROGroup "" any noauth exact my_vw1 none none

access MyRWGroup "" any noauth exact my_vw2 my_vw2 none

# -----------------------------------------------------------------------------

# Give access to other interfaces besides the loopback #####

$ sudo gedit /etc/default/snmpd

find the line:

SNMPDOPTS='-Lsd -Lf /dev/null -u snmp -I -smux -p /var/run/snmpd.pid 127.0.0.1'

and change it to:

SNMPDOPTS='-Lsd -Lf /dev/null -u snmp -I -smux -p /var/run/snmpd.pid'

# Restart snmpd to load the new config #####

$ sudo /etc/init.d/snmpd restart

# Test the SNMP Server #####

$ sudo apt-get install snmp

$ sudo snmpwalk -v 2c -c my_comnt localhost system

http://myhowtosandprojects.blogspot.com/2009/04/what-is-net-snmp-simple-network.html

24.10.10

Don’t Install Spring Roo at Global Directory without Writing Permission (Linux)

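Reason: the roo launcher script resolves ROO_HOME to the installation directory and places its OSGi framework storage (cache) under it, so the user running roo presumably needs write permission there: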

#!/bin/sh

PRG="$0"

# Follow symbolic links until PRG points at the real launcher file

while [ -h "$PRG" ]; do

ls=`ls -ld "$PRG"`

link=`expr "$ls" : '.*-> \(.*\)$'`

if expr "$link" : '/.*' > /dev/null; then

PRG="$link"

else

PRG=`dirname "$PRG"`/"$link"

fi

done

ROO_HOME=`dirname "$PRG"`

# Absolute path

ROO_HOME=`cd "$ROO_HOME/.." ; pwd`

# ... (lines 18-34 of the script omitted)

ROO_OSGI_FRAMEWORK_STORAGE="$ROO_HOME/cache"

# echo "ROO_OSGI_FRAMEWORK_STORAGE: $ROO_OSGI_FRAMEWORK_STORAGE"

ROO_AUTO_DEPLOY_DIRECTORY="$ROO_HOME/bundle"

# echo "ROO_AUTO_DEPLOY_DIRECTORY: $ROO_AUTO_DEPLOY_DIRECTORY"

ROO_CONFIG_FILE_PROPERTIES="$ROO_HOME/conf/config.properties"

# echo "ROO_CONFIG_FILE_PROPERTIES: $ROO_CONFIG_FILE_PROPERTIES"
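The same resolution can be sketched in Java to check an installation before running roo. This is only an illustration under assumptions: the class name RooHomeCheck, its method names and the default launcher path are hypothetical, and the check simply asks whether the current user could write to the cache directory that the script derives from ROO_HOME.

```java
import java.io.IOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

final class RooHomeCheck {

    // Mirrors the script: resolve symlinks to the real launcher file,
    // then go from <home>/bin/<launcher> up to <home>.
    static Path resolveHome(Path launcher) throws IOException {
        Path real = launcher.toRealPath();       // follows every symlink in the path
        return real.getParent().getParent();
    }

    // True when the user can write to <home>/cache, or can create it under <home>.
    static boolean canUseCache(Path home) {
        Path cache = home.resolve("cache");
        return Files.exists(cache) ? Files.isWritable(cache) : Files.isWritable(home);
    }

    public static void main(String[] args) throws IOException {
        // Hypothetical default; pass the real launcher location as the first argument.
        Path launcher = Paths.get(args.length > 0 ? args[0] : "/usr/local/bin/roo");
        if (!Files.exists(launcher)) {
            System.out.println("launcher not found: " + launcher);
            return;
        }
        Path home = resolveHome(launcher);
        System.out.println("ROO_HOME = " + home + ", cache usable: " + canUseCache(home));
    }
}
```

If the check reports that the cache is not usable, installing Roo under a directory owned by the current user avoids the problem.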

22.10.10

Creating Application using Spring Roo and Deploying on Google App Engine

http://java.dzone.com/articles/creating-application-using

Spring Roo is a rapid application development tool which helps you quickly build Spring-based enterprise applications in the Java programming language. Google App Engine is a cloud computing technology which lets you run your application on Google's infrastructure. Using Spring Roo, you can develop applications which can be deployed on Google App Engine. In this tutorial, we will develop a simple application which can run on Google App Engine.

Roo configures and manages your application using the Roo Shell. Roo Shell can be launched as a stand-alone, command-line tool, or as a view pane in the SpringSource Tool Suite IDE.

Prerequisite

Before we can start using the Roo shell, we need to download and install all the prerequisites.

- Download and install SpringSource Tool Suite 2.3.3.M2. Spring Roo 1.1.0.M2 comes bundled with STS. During installation, the installer asks for the location where STS should be installed. In that directory, it creates a folder named "roo-%release_number%" which contains the Roo files. Add %spring_roo%/roo-1.1.0.M2/bin to your path so that you can run roo commands from the command line.

- Start STS and go to the Dashboard (Help->Dashboard)

- Click on the Extensions tab

- Install the "Google Plugin for Eclipse" and the "DataNucleus Plugin".

- Restart STS when prompted.

After installing all the above we can start building application.

ConferenceRegistration.Roo Application

Conference Registration is a simple application where speaker can register themselves and create a session they want to talk about. So, we will be having two entities Speaker and Presentation. Follow the instructions to create the application:

- Open your operating system command-line shell

- Create a directory named conference-registration

- Go to the conference-registration directory in your command-line shell

- Fire roo command. You will see a roo shell as shown below. Hint command gives you the next actions you can take to manage your application.

- Type the hint command and press Enter. Roo will tell you that you first need to create a project, and that to create one you should type 'project' and then hit TAB. The hint command is very useful because you don't have to memorize all the commands; it always gives you the next logical steps that you can take at that point.

- Roo hint command told us that we have to create the project so type the project command as shown below

project --topLevelPackage com.shekhar.conference.registration --java 6

This command created a new Maven project with the top-level package name com.shekhar.conference.registration and created directories for storing source code and other resource files. In this command, we also specified that we are using Java version 6.

- Once you have created the project, type in the hint command again, roo will tell you that now you have to set up the persistence. Type the following command

persistence setup --provider DATANUCLEUS --database GOOGLE_APP_ENGINE --applicationId roo-gae

This command sets up everything required for persistence. It creates persistence.xml and adds all the dependencies required for persistence to pom.xml. We have chosen DATANUCLEUS as the provider and GOOGLE_APP_ENGINE as the database because we are developing our application for Google App Engine, which uses its own datastore. The applicationId is also required when we deploy our application to Google App Engine. Our persistence setup is now complete.

- Type the hint command again, roo will tell you that you have to create entities now. So, we need to create our entities Speaker and Presentation. To create Speaker entity, we will type the following commands

entity --class ~.domain.Speaker --testAutomatically

field string --fieldName fullName --notNull

field string --fieldName email --notNull --regexp ^([0-9a-zA-Z]([-.\w]*[0-9a-zA-Z])*@([0-9a-zA-Z][-\w]*[0-9a-zA-Z]\.)+[a-zA-Z]{2,9})$

field string --fieldName city

field date --fieldName birthDate --type java.util.Date --notNull

field string --fieldName bio

The above six lines created an entity named Speaker with different fields. Here we have used a notNull constraint, email regex validation, and a date field. Spring Roo on App Engine does not support enums and references yet, which means that you can't define one-to-one or one-to-many relationships between entities yet. These capabilities are supported in Spring MVC applications, but Spring MVC applications can't be deployed on App Engine as of now. The Spring Roo JIRA tracks these issues. They will be fixed in future releases (hope so :) ).
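As a rough check of what the --regexp constraint on the email field accepts, the expression can be tried in plain Java. This is only a sketch: the class name SpeakerEmailCheck and the sample addresses are made up for illustration, and it says nothing about how Roo itself wires the constraint into the generated entity.

```java
import java.util.regex.Pattern;

final class SpeakerEmailCheck {

    // The expression passed to --regexp, with backslashes doubled for a Java string literal.
    static final Pattern EMAIL = Pattern.compile(
            "^([0-9a-zA-Z]([-.\\w]*[0-9a-zA-Z])*@([0-9a-zA-Z][-\\w]*[0-9a-zA-Z]\\.)+[a-zA-Z]{2,9})$");

    static boolean isValid(String email) {
        return EMAIL.matcher(email).matches();
    }

    public static void main(String[] args) {
        // Sample inputs are illustrative only.
        for (String s : new String[] {"speaker@example.com", "a@b.com", "no-at-sign"}) {
            System.out.println(s + " -> " + isValid(s));
        }
    }
}
```

One consequence worth knowing: every domain label must have at least two characters, so an address such as a@b.com is rejected by this pattern.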

- Next create the second entity of our application Presentation. To create Presentation entity type the following commands on roo shell

entity --class ~.domain.Presentation --testAutomatically

field string --fieldName title --notNull

field string --fieldName description --notNull

field string --fieldName speaker --notNull

The above four lines created a JPA entity called Presentation, located in the domain sub-package, and added three fields: title, description and speaker. As you can see, speaker is added as a String (just enter the full name). Spring Roo on Google App Engine still does not support references.

- Now that we have created our entities, we have to create the face of our application i.e. User Interface. Currently, only GWT created UI runs on app engine. So, we will create GWT user interface. To do that type

gwt setup

This command will add the GWT controller as well as everything the UI requires. It may take a couple of minutes if you don't have those dependencies in your Maven repository.

- Next you can configure the log4j to Debug level using the following command

logging setup --level DEBUG

- Quit the roo shell

- You can easily run your application locally if you have Maven installed on your system: simply type "mvn gwt:run" at your command-line shell while you are in the directory in which you created the project. This will launch the GWT development mode so that you can test your application. Please note that the application does not run in the Google Chrome browser when you run it from your development environment, so it is better to run it in Firefox.

- To deploy your application to Google App Engine just type

mvn gwt:compile gae:deploy

It will ask you for your App Engine credentials (email ID and password).

I hope this tutorial will help you in building applications which can be deployed on Google App Engine using Spring Roo. You can test the application @ http://roo-gae.appspot.com/

21.10.10

F*** U Microsoft!!

I really hate the new version of MSN, or Live Messenger. What I want is only a simple Instant Message system to talk to my friends, or co-workers. Why do you show me such a big screen to distract my attention and put me into trouble!

F*** U, Microsoft. Go to hell!

Maven: Such a Good Thing!

When I was still away from Maven, I could not even imagine that such a powerful thing existed. What really matters is the idea of a global, but not centralized, repository.

There are thousands of plugin systems in the world, but I regard Maven as the most successful one. I don’t know where this success comes from, or why people want to contribute to such a specific plugin system. But, apparently, people did, and are still doing so.

For the final deployed system, it is all right to have a complicated one, and you can build it elaborately. But for the development system, from my own point of view, I need a simple setup procedure, a short test cycle, and an integrated environment. Maven gives me all of these.

Playing with Spring-Roo

Actually, I like this type of thing. Editing XML configuration files for Spring or Hibernate does not really make sense to me, and most of the time it just bothers me. And I really like command-line tools, although I hate Windows DOS-prompt-style command lines.

It is good for me to come across such a simple get-started page:

http://www.springsource.org/roo/start

Specifically, it is very good to be able to finish my first trial just by following these lines:

mkdir hello

cd hello

roo

roo> hint

roo> project --topLevelPackage com.foo

roo> persistence setup --provider HIBERNATE --database HYPERSONIC_IN_MEMORY

roo> entity --class ~.Timer --testAutomatically

roo> field string --fieldName message --notNull

roo> hint controllers

roo> controller all --package ~.web

roo> selenium test --controller ~.web.TimerController

roo> gwt setup

roo> perform tests

roo> quit

See what the narrator says here:

Wow! You've now got a webapp complete with JUnit tests, Selenium tests, a MVC front-end, a Google Web Toolkit frontend. Just use “mvn gwt:run” to play with the GWT client, or use “mvn tomcat:run” to start the Tomcat MVC front-end.

Yes, that is it! Although you might think it is just a toy program, it is the base for real software, and I can build my things on top of it.

It is also good to have another simple blog page in Chinese that shares this knowledge:

20.10.10

Contrasting Nexus and Artifactory

I just came across JFrog’s Artifactory and found it very similar to Sonatype’s Nexus, so I searched Google for the two names and found the following article. It’s a little old and comes from Sonatype, but it is worth a look.

Contrasting Nexus and Artifactory

January 19th, 2009, by Brian Fox

Today’s Maven users have two solid choices when it comes to repository managers: Sonatype’s Nexus and JFrog’s Artifactory. While we are convinced that Nexus is the better choice, we’re especially happy to see that there is competition in this market. Competition leads to more efficient markets for software and more “accountability” to the end-user. Nexus and Artifactory have much more in common than not, but we think the differences are important to understand as they have dramatic impacts on performance and scalability. In this post, I contrast some of the design decisions made in the construction of Artifactory with the design decisions we made when developing Nexus.

Contrast #1: Network: WebDAV vs. REST

The first major difference is that Artifactory uses Jackrabbit as a WebDAV implementation for artifact uploads. Nexus implements a simple, lightweight HTTP PUT via Restlet instead. We had a WebDAV implementation in early Alpha releases but found it to be far too heavy and slow. Switching to a simple REST call improved our performance and significantly decreased the memory footprint. A profile of the memory use in Artifactory that I ran on previous versions showed that the majority of the object creation and memory allocation is related to Jackrabbit. Sure, you can’t mount a Nexus repository with a webfolder using WebDAV, but is that really what you need a repository manager to do, or would you rather it be blazingly fast doing Maven builds? It is possible to use the lightweight wagon against Artifactory (http vs dav:http), but the choice of Jackrabbit in our opinion is overhead that isn’t needed.

Contrast #2: Storage: Relational Database vs. Filesystem

The second major difference is that Nexus deliberately chooses to use a regular Maven 2 repository layout to store the data on disk. Doing this effectively isn’t always easy and we’ve had many discussions about it amongst the team, but I hold fast to this approach for several reasons:

- It makes importing and exporting the repositories a no brainer. Simply copy the data into the correct folder in the Nexus work folder and you’re done, import finished. Copy it out, export done.

- The incremental nature of the file changes in a Maven 2 repository layout makes it extremely well suited for incremental backups to tape or other archiving medium.

- Nexus also keeps its metadata (not to be confused with the maven-metadata) separate from the artifacts, and the data is rebuilt on the fly if it’s missing. If you are unlucky and have some hardware or disk error, you will likely only get one file corrupted, not the entire repository.

- Having the metadata separate means Nexus upgrades don’t have to touch any data in the repository folder. Upgrades and rollbacks of the system can happen as fast as you can stop one instance and start the next.
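To make the "regular Maven 2 repository layout" concrete, here is a minimal sketch of how artifact coordinates map to a relative file path in that layout. The class and method names are hypothetical, the coordinates are just examples, and the sketch ignores classifiers and snapshot timestamps.

```java
final class Maven2Layout {

    // groupId dots become directories; artifactId and version each add one directory,
    // and the file itself is named <artifactId>-<version>.<extension>.
    static String path(String groupId, String artifactId, String version, String extension) {
        return groupId.replace('.', '/') + "/" + artifactId + "/" + version + "/"
                + artifactId + "-" + version + "." + extension;
    }

    public static void main(String[] args) {
        // Example coordinates chosen for illustration.
        System.out.println(path("org.snmp4j", "snmp4j", "1.11.1", "jar"));
        System.out.println(path("log4j", "log4j", "1.2.14", "pom"));
    }
}
```

Because every artifact is an ordinary file at a predictable path, copying a directory tree in or out is enough to import or export it, which is the point the list above makes.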

Artifactory takes the polar opposite approach and stores the metadata and the artifacts themselves in a huge database. The reason they claim it’s needed is for transactional behavior. Using a database doesn’t guarantee transactionality and it certainly isn’t the only way to get transactional behavior.

In order to use a database, Artifactory needs to have import and export tools. The imports and exports of this data are reported to take a significant amount of time (http://issues.jfrog.org/jira/browse/RTFACT-317). Some upgrades require a full dump and re-import of the database, taking out large systems for a significant amount of time. Also, what happens if you need to tweak or repair a file in the system? Break out your dba books and go to town. How about incremental backups? Would you be happy if a single disk error made your entire repository garbage?

We feel strongly that introducing a repository manager into your system shouldn’t require a dba to manage the data. Quite reliable backups can be performed with Nexus using robocopy or rsync tools and a simple script, and transactions can be obtained with much less overhead. In fact, with the Staging plugin, Nexus is able to turn an entire multimodule build into a single transaction. There are ways to implement “transactional” interactions in a piece of software without having to throw everything into a database. We think that loading the entire contents of a repository into Jackrabbit and modelling the repository in a relational database is introducing much more complexity into the problem than is necessary.

Contrast #3: Storage Size

It has also been reported that the indexes and metadata introduced by Artifactory can double or triple the size of a repo. See this thread for real examples. Perhaps that’s manageable on a 1gb repo, but how about something like Central at 60+ gb? Nexus uses the Nexus-indexer (Artifactory uses it as well to provide search capability) which is just a Lucene index. We can provide indexes of Central that are only 30mb…not double the size of the repo itself. Note that the Nexus indexes also include cross references of the Java classes contained in the jars. Once again, we think involving a relational database into this problem is an unnecessarily complicating factor.

A preliminary test import of a 116mb release repo took 5 minutes and the resulting data size was 323mb (2.78x the original size). Extrapolating that out to a 4gb repository gets you about 3 hours of import and a total data size of 11gb. Sure disks are cheap these days, but still tripling the size of your data has many long term ramifications when you consider backups, replication etc.
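The extrapolation in the paragraph above can be reproduced with a few lines of arithmetic. This sketch assumes that both import time and stored size scale linearly with repository size and that 4gb means 4096 MB; the class and method names are made up for illustration.

```java
final class ImportEstimate {

    // Ratio of stored size to original size, e.g. 323 MB / 116 MB.
    static double growthFactor(double sourceMb, double storedMb) {
        return storedMb / sourceMb;
    }

    // Linear extrapolation of import time from a measured sample.
    static double estimateMinutes(double repoMb, double sampleMb, double sampleMinutes) {
        return repoMb / sampleMb * sampleMinutes;
    }

    public static void main(String[] args) {
        double factor = growthFactor(116, 323);
        double minutes = estimateMinutes(4096, 116, 5);
        System.out.printf("growth factor: %.2f%n", factor);
        System.out.printf("import time for 4 GB: %.1f hours%n", minutes / 60);
        System.out.printf("stored size for 4 GB: %.1f GB%n", 4 * factor);
    }
}
```

Under these assumptions the numbers land close to the article's figures: a factor of about 2.78, roughly three hours of import, and about 11 GB on disk.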

Note: The actual import I ran failed 3 times on data from central due to too strict checking, I had to prune or repair the files just to get it to import. Fortunately that didn’t happen midway through a 3 hour import…. which leads me to the next point…

Contrast #4: Nexus Doesn’t Interfere

We believe that Nexus shouldn’t interfere with your builds. We all know that the data in remote repos like Central can be incomplete. However, if Maven is able to use it, then we make sure Nexus won’t get in the way. Artifactory proactively blocks any data that isn’t parse-able as it comes through as a feature. This means you may have a build that works without Artifactory and breaks with it because it refuses to proxy (and apparently import) any files it doesn’t like. Nexus will report that there’s a problem to the admins to deal with, but won’t cause Maven to blow up for the developer. Nexus favors stability over correctness for proxy repositories.

Download Nexus Today

Nexus is available as an open source project for free. There is also a Pro version that includes additional commercial-level functionality and professional support for only $2995 per server. While the Open Source version is capable and popular, Nexus Professional adds some new features that are targeted at Enterprise Users: staging, procurement, and LDAP integration.

http://www.sonatype.com/people/2009/01/contrasting-nexus-and-artifactory/

11.10.10

SNMP Trap Example Shipped with SNMP4J

http://www.snmp4j.org/doc/org/snmp4j/package-summary.html

SNMPv1 TRAP PDU

import org.snmp4j.PDU;

import org.snmp4j.PDUv1;

...

PDUv1 pdu = new PDUv1();

pdu.setType(PDU.V1TRAP);

pdu.setGenericTrap(PDUv1.COLDSTART);

...

SNMPv2c/SNMPv3 INFORM PDU

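import org.snmp4j.PDU;

import org.snmp4j.ScopedPDU;

import org.snmp4j.mp.SnmpConstants;

import org.snmp4j.smi.Integer32;

import org.snmp4j.smi.OID;

import org.snmp4j.smi.TimeTicks;

import org.snmp4j.smi.VariableBinding;

...

ScopedPDU pdu = new ScopedPDU();

pdu.setType(PDU.INFORM);

// sysUpTime in hundredths of a second (startTime is the start time in milliseconds)

long sysUpTime = (System.currentTimeMillis() - startTime) / 10;

pdu.add(new VariableBinding(SnmpConstants.sysUpTime, new TimeTicks(sysUpTime)));

pdu.add(new VariableBinding(SnmpConstants.snmpTrapOID, SnmpConstants.linkDown));

// payload: the ifIndex instance of the interface that went down
// (note the "." separating the column OID from the instance index)

pdu.add(new VariableBinding(new OID("1.3.6.1.2.1.2.2.1.1." + downIndex),

new Integer32(downIndex)));

...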
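The sysUpTime line in the INFORM example divides by 10 because SNMP TimeTicks count hundredths of a second, while Java clocks count milliseconds. A small stand-alone sketch of that conversion, without SNMP4J, might look like this; the class name and the formatting helper are assumptions added for illustration.

```java
final class UpTimeTicks {

    // Milliseconds elapsed since start, converted to hundredths of a second.
    static long toTimeTicks(long startMillis, long nowMillis) {
        return (nowMillis - startMillis) / 10;
    }

    // Human-readable rendering of a tick count: days, then hh:mm:ss.cc.
    static String format(long ticks) {
        long centis = ticks % 100;
        long totalSeconds = ticks / 100;
        long seconds = totalSeconds % 60;
        long minutes = (totalSeconds / 60) % 60;
        long hours = (totalSeconds / 3600) % 24;
        long days = totalSeconds / 86400;
        return String.format("%d days, %02d:%02d:%02d.%02d", days, hours, minutes, seconds, centis);
    }

    public static void main(String[] args) throws InterruptedException {
        long start = System.currentTimeMillis();
        Thread.sleep(250);
        long ticks = toTimeTicks(start, System.currentTimeMillis());
        System.out.println(ticks + " ticks = " + format(ticks));
    }
}
```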

Multi Threaded Trap Receiver using SNMP4J

http://www.ashishpaliwal.com/blog/2008/12/multi-threaded-trap-receiver-using-snmp4j/

Implementing Trap Sender using SNMP4J

In this post, we shall implement a Trap Sender using SNMP4J. We may choose to use Apache MINA for sending Traps or can resort to using DatagramSocket class directly.

This shall be the logical flow of the implementation

- Get the encoded Trap Data

- Send the Trap

Let's look at the first component, getting the encoded Trap data.

The code snippet above shows a simple way of creating and encoding a Trap PDU. Essentially, we create an instance of the PDU class and set the type to Trap. This is important, else SNMP4J shall throw an exception. Thereafter, we can set the trap parameters. Here, we have hardcoded the parameters; custom implementations can take these from config files or from a UI. After setting the parameters, we just call the encode function, passing the output stream, and collect the byte array to be sent.

Sending part is even simpler
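The original snippet for this step is not reproduced here, so the following is only a minimal sketch of sending with the DatagramSocket class directly, as the post suggests. It assumes the encoded trap bytes are already available; the class name UdpTrapSender and the placeholder payload are hypothetical, and port 162 is the conventional SNMP trap port.

```java
import java.io.IOException;
import java.net.DatagramPacket;
import java.net.DatagramSocket;
import java.net.InetAddress;

final class UdpTrapSender {

    // Sends the already-encoded trap bytes as a single UDP datagram.
    static void send(byte[] encodedTrap, InetAddress host, int port) throws IOException {
        try (DatagramSocket socket = new DatagramSocket()) {
            socket.send(new DatagramPacket(encodedTrap, encodedTrap.length, host, port));
        }
    }

    public static void main(String[] args) throws IOException {
        // Placeholder bytes (an empty BER SEQUENCE), not a valid SNMP message;
        // in practice this would be the output of the encoding step.
        byte[] payload = {0x30, 0x00};
        send(payload, InetAddress.getLoopbackAddress(), 162);
        System.out.println("sent " + payload.length + " bytes");
    }
}
```

UDP gives no delivery confirmation, so a successful send only means the datagram left the socket.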

http://www.ashishpaliwal.com/blog/2008/10/implementing-trap-sender-using-snmp4j/

In-memory backend for Derby 10.5

Derby 10.5 contains a long-awaited feature: an in-memory storage backend. With it, your entire database is stored in main memory instead of on disk.

http://blogs.sun.com/kah/entry/derby_10_5_preview_in

Tuesday Apr 21, 2009

Derby 10.5 preview: In-memory backend

The upcoming Derby 10.5 release will contain a long-awaited feature: an in-memory storage backend. With this backend, your entire database will be stored in main memory instead of on disk.

But isn't the whole point of using a database that the data should be stored safely on disk or some other kind of persistent storage? Normally, yes, but there are cases where you don't really care if you lose the database when the application crashes.

For instance, if you are running unit tests against your application, it's probably more important that the tests run fast and that it's easy to clean up after the tests. With the in-memory storage backend, you'll notice that many database operations (like database creation, inserts and updates) are a lot faster because they don't need to access the disk. Also, there's no need to clean up and delete the database files after the tests have completed, since the database goes away when the application terminates.

So how is the in-memory backend enabled? That's simple, you just add the memory subprotocol to the JDBC connection URL, and no other changes should be needed to your application. If you normally connect to the database with the URL jdbc:derby:MyDB you should instead use jdbc:derby:memory:MyDB (and of course add any of the connection attributes needed, like create=true to create the database). Here's an example in IJ, Derby's command line client:

$ java -jar derbyrun.jar ij

ij version 10.5

ij> connect 'jdbc:derby:memory:MyDB;create=true';

ij> create table my_table(x int);

0 rows inserted/updated/deleted

ij> insert into my_table values 1, 2, 3;

3 rows inserted/updated/deleted

ij> exit;

After exiting, you can verify that no database directory was created:

$ ls MyDB

MyDB: No such file or directory
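From application code the change is the same one-word difference in the JDBC URL. The sketch below builds both URL forms and tries the IJ statements through plain JDBC; the class name DerbyUrls is hypothetical, and the connection attempt only succeeds if a Derby 10.5 or later jar is on the classpath, which is an assumption about your setup.

```java
import java.sql.Connection;
import java.sql.DriverManager;
import java.sql.ResultSet;
import java.sql.SQLException;
import java.sql.Statement;

final class DerbyUrls {

    static String onDisk(String dbName) {
        return "jdbc:derby:" + dbName;
    }

    // Same URL with the memory subprotocol, optionally with create=true.
    static String inMemory(String dbName, boolean create) {
        return "jdbc:derby:memory:" + dbName + (create ? ";create=true" : "");
    }

    public static void main(String[] args) {
        String url = inMemory("MyDB", true);
        System.out.println("Connecting to " + url);
        try (Connection conn = DriverManager.getConnection(url);
             Statement stmt = conn.createStatement()) {
            stmt.executeUpdate("create table my_table(x int)");
            stmt.executeUpdate("insert into my_table values 1, 2, 3");
            try (ResultSet rs = stmt.executeQuery("select count(*) from my_table")) {
                rs.next();
                System.out.println("rows: " + rs.getInt(1));
            }
        } catch (SQLException e) {
            // Expected when no Derby driver is available on the classpath.
            System.out.println("Could not connect: " + e.getMessage());
        }
    }
}
```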

More or less everything you can do with an ordinary database should be possible to do with an in-memory database, including taking a backup and restoring it. This can be useful, as it allows you to dump the database to disk before you shut down your application, and reload it into memory the next time you start the application. Looking again at the example above, you could issue this command before typing exit in IJ:

ij> call syscs_util.syscs_backup_database('/var/backups');

0 rows inserted/updated/deleted

Later, when you restart the application, you can load the database back into the in-memory store by using the createFrom connection attribute:

$ java -jar derbyrun.jar ij

ij version 10.5

ij> connect 'jdbc:derby:memory:MyDB;createFrom=/var/backups/MyDB';

ij> select * from my_table;

X

-----------

1

2

3

3 rows selected

Posted at 10:50AM Apr 21, 2009 by Knut Anders Hatlen in Java DB

30.9.10

The Simplest SNMP Agent Implementation Using SNMP4J Agent

If you are looking for a very simple SNMP Agent implementation using the SNMP4J Agent library, here it is:

http://code.google.com/p/springside-sub/source/browse/#svn/trunk/tiny-examples/simple-snmp-agent

Essential Elements

To start an SNMP Agent, I need the following essential elements:

- Daemon information and daemon

- Authentication

With SNMP4J, you can find the following terminologies / mappings:

| Concept | SNMP4J term |
|---|---|
| Authentication | Community |
| Network Daemon | Transport |

BaseAgent

BaseAgent is usually the starting point for programming an SNMP4J agent, and it is a good idea to understand all the properties of this class. Interestingly, they include:

- MIBs

- Manageable Object Server

- Proxy Forwarder

MIBs

protected SNMPv2MIB snmpv2MIB;

protected SnmpFrameworkMIB snmpFrameworkMIB;

protected SnmpTargetMIB snmpTargetMIB;

protected SnmpNotificationMIB snmpNotificationMIB;

protected SnmpProxyMIB snmpProxyMIB;

protected SnmpCommunityMIB snmpCommunityMIB;

protected Snmp4jLogMib snmp4jLogMIB;

protected Snmp4jConfigMib snmp4jConfigMIB;

protected UsmMIB usmMIB;

protected VacmMIB vacmMIB;

29.9.10

Starting the Test Agent of the SNMP4J-Agent Project

If you try to start the test agent of SNMP4J-Agent by simply entering the following command:

$ mvn exec:java -Dexec.mainClass=org.snmp4j.agent.test.TestAgent

you will get the following error:

java.lang.NoClassDefFoundError: org/snmp4j/event/CounterListener

Caused by: java.lang.ClassNotFoundException: org.snmp4j.event.CounterListener

at java.net.URLClassLoader$1.run(URLClassLoader.java:200)

at java.security.AccessController.doPrivileged(Native Method)

at java.net.URLClassLoader.findClass(URLClassLoader.java:188)

at java.lang.ClassLoader.loadClass(ClassLoader.java:307)

at sun.misc.Launcher$AppClassLoader.loadClass(Launcher.java:301)

at java.lang.ClassLoader.loadClass(ClassLoader.java:252)

at java.lang.ClassLoader.loadClassInternal(ClassLoader.java:320)

Could not find the main class: org.snmp4j.agent.test.TestAgent. Program will exit.

Exception in thread "main"

To solve the problem, modify the pom.xml file and remove the provided scope from these two dependencies, so that they end up on the classpath that exec:java uses:

<dependency>

<groupId>log4j</groupId>

<artifactId>log4j</artifactId>

<version>1.2.14</version>

<!--

<scope>provided</scope>

-->

</dependency>

<dependency>

<groupId>org.snmp4j</groupId>

<artifactId>snmp4j</artifactId>

<version>1.11.1</version>

<!--

<scope>provided</scope>

-->

</dependency>

The Batch Command to Execute SNMP4J

@echo off

set CMD_LINE_ARGS=

:setArgs

if ""%1""=="""" goto doneSetArgs

set CMD_LINE_ARGS=%CMD_LINE_ARGS% %1

shift

goto setArgs

:doneSetArgs

rem %CMD_LINE_ARGS%

java -cp lib\log4j-1.2.14.jar;dist\snmp4j-1.11.1.jar org.snmp4j.tools.console.SnmpRequest %CMD_LINE_ARGS%

Basic Encoding Rules (BER)

http://en.wikipedia.org/wiki/Basic_Encoding_Rules

The Basic Encoding Rules (BER) is one of the encoding formats defined as part of the ASN.1 standard specified by the ITU in X.690.

TLV encoding

TLV: Type + Length + Value

I developed many protocols using TLV encoding in my previous jobs. It’s very flexible and efficient.

However, in my designs, the structures were flat. In BER, the encoding of a PDU consists of cascaded (nested) TLV encodings; the encapsulating types are SEQUENCE, SET and CHOICE.

Moreover, in my designs, types were 32-bit integers, while other designers define the type as a single octet whose bits specify the characteristics of the value field. The reason is very simple: I usually don’t care too much about network efficiency, while a public protocol designer must take good care of it.
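To illustrate the cascaded TLV idea, here is a minimal encoder sketch that writes one type octet, a BER-style length (short form below 128, long form otherwise) and the value, and then nests one TLV inside another. The class name BerTlv is made up, only single-octet types are handled, and this is not SNMP4J's own encoder.

```java
import java.io.ByteArrayOutputStream;

final class BerTlv {

    // Encodes type + length + value; the value may itself contain encoded TLVs.
    static byte[] encode(int typeOctet, byte[] value) {
        ByteArrayOutputStream out = new ByteArrayOutputStream();
        out.write(typeOctet);
        int length = value.length;
        if (length < 128) {
            out.write(length);                       // short form: length fits in one octet
        } else {
            int octets = (32 - Integer.numberOfLeadingZeros(length) + 7) / 8;
            out.write(0x80 | octets);                // long form: number of length octets
            for (int i = octets - 1; i >= 0; i--) {
                out.write((length >>> (8 * i)) & 0xFF);
            }
        }
        out.write(value, 0, value.length);
        return out.toByteArray();
    }

    public static void main(String[] args) {
        byte[] integerFive = encode(0x02, new byte[] {5});   // INTEGER 5
        byte[] sequence = encode(0x30, integerFive);         // SEQUENCE wrapping it
        StringBuilder hex = new StringBuilder();
        for (byte b : sequence) {
            hex.append(String.format("%02X ", b));
        }
        System.out.println(hex.toString().trim());
    }
}
```

The single type octet is what keeps the overhead at two bytes per small field, compared with the eight or more that a 32-bit type and a 32-bit length would cost.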

Class bits

00 – Universal

The value is of a type native to ASN.1.

01 – Application

The Application class is only valid for one specific application.

10 – Context specific

Context-specific depends on the context (such as within a sequence, set or choice).

11 – Private

Private can be defined in private specifications.

Universal Class Tags

http://luca.ntop.org/Teaching/Appunti/asn1.html

| Name | P/C | Number (decimal) | Number (hexadecimal) |
|---|---|---|---|
| EOC (End-of-Content) | P | 0 | 0 |
| BOOLEAN | P | 1 | 1 |
| INTEGER | P | 2 | 2 |
| BIT STRING | P/C | 3 | 3 |
| OCTET STRING | P/C | 4 | 4 |
| NULL | P | 5 | 5 |
| OBJECT IDENTIFIER | P | 6 | 6 |
| Object Descriptor | P | 7 | 7 |
| EXTERNAL | C | 8 | 8 |
| REAL (float) | P | 9 | 9 |
| ENUMERATED | P | 10 | A |
| EMBEDDED PDV | C | 11 | B |
| UTF8String | P/C | 12 | C |
| RELATIVE-OID | P | 13 | D |
| SEQUENCE and SEQUENCE OF | C | 16 | 10 |
| SET and SET OF | C | 17 | 11 |
| NumericString | P/C | 18 | 12 |
| PrintableString | P/C | 19 | 13 |
| T61String | P/C | 20 | 14 |
| VideotexString | P/C | 21 | 15 |
| IA5String | P/C | 22 | 16 |
| UTCTime | P/C | 23 | 17 |
| GeneralizedTime | P/C | 24 | 18 |
| GraphicString | P/C | 25 | 19 |
| VisibleString | P/C | 26 | 1A |
| UniversalString | P/C | 28 | 1C |
| GeneralString | P/C | 27 | 1B |
| CHARACTER STRING | P/C | 29 | 1D |
| BMPString | P/C | 30 | 1E |
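The class bits, the P/C flag and the tag number from the table combine into a single identifier octet: the class occupies the top two bits, the primitive/constructed flag the next bit, and the tag number the low five bits. A small sketch of that packing follows; the class name BerIdentifier is hypothetical, and tag numbers above 30, which need the multi-octet form, are deliberately not covered.

```java
final class BerIdentifier {

    static final int UNIVERSAL = 0b00, APPLICATION = 0b01, CONTEXT_SPECIFIC = 0b10, PRIVATE = 0b11;

    // Packs class (bits 8-7), constructed flag (bit 6) and tag number (bits 5-1).
    static int octet(int classBits, boolean constructed, int tagNumber) {
        if (tagNumber < 0 || tagNumber > 30) {
            throw new IllegalArgumentException("high-tag-number form is not covered by this sketch");
        }
        return (classBits << 6) | (constructed ? 0x20 : 0) | tagNumber;
    }

    public static void main(String[] args) {
        System.out.printf("INTEGER  -> 0x%02X%n", octet(UNIVERSAL, false, 2));
        System.out.printf("SEQUENCE -> 0x%02X%n", octet(UNIVERSAL, true, 16));
        System.out.printf("SET      -> 0x%02X%n", octet(UNIVERSAL, true, 17));
        // Context-specific tag 4, constructed: the tag SNMPv1 uses for its Trap PDU.
        System.out.printf("[4]      -> 0x%02X%n", octet(CONTEXT_SPECIFIC, true, 4));
    }
}
```

This is why a SEQUENCE shows up as 0x30 in packet dumps even though its tag number in the table is 0x10: the constructed bit adds 0x20.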

Usage

Despite its perceived problems, BER is a popular format for transmitting data, particularly in systems with different native data encodings.

- The SNMP protocol specifies ASN.1 with BER as its required encoding scheme.